
What the hell are the architectures of scalable streaming applications?

Here's some common WebRTC jargon to know:

TURN server - A TURN server acts as an intermediary that relays packets of media data from one device to another. By using these relays, the TURN server can work around firewalls and other security measures that prevent devices from making a direct connection.

STUN server - The STUN server allows clients to find out their public address, the type of NAT they are behind and the Internet side port associated by the NAT with a particular local port.

The WebRTC flow?

Signaling Server Setup:

You set up a signaling server (e.g., using WebSocket or HTTP) to facilitate communication between peers.

Peers exchange metadata and negotiate connection details through the signaling server.

Browser Setup:

Two users open a webpage in their browsers.

Each browser uses JavaScript and the WebRTC API to capture video and audio from the user's camera and microphone.

Signaling:

- The browsers send signaling messages to the server to exchange information about their capabilities and to establish a peer-to-peer connection.

Peer Connection:

- Once signaling is complete, the browsers establish a direct peer-to-peer connection for video and audio transmission.

Real-Time Communication:

- Video and audio data are transmitted directly between the browsers without passing through a central server after the initial setup (unless a direct connection is impossible and a TURN relay has to be used).

Viewer Interaction:

- The browsers continuously exchange data, providing a real-time, low-latency video call.

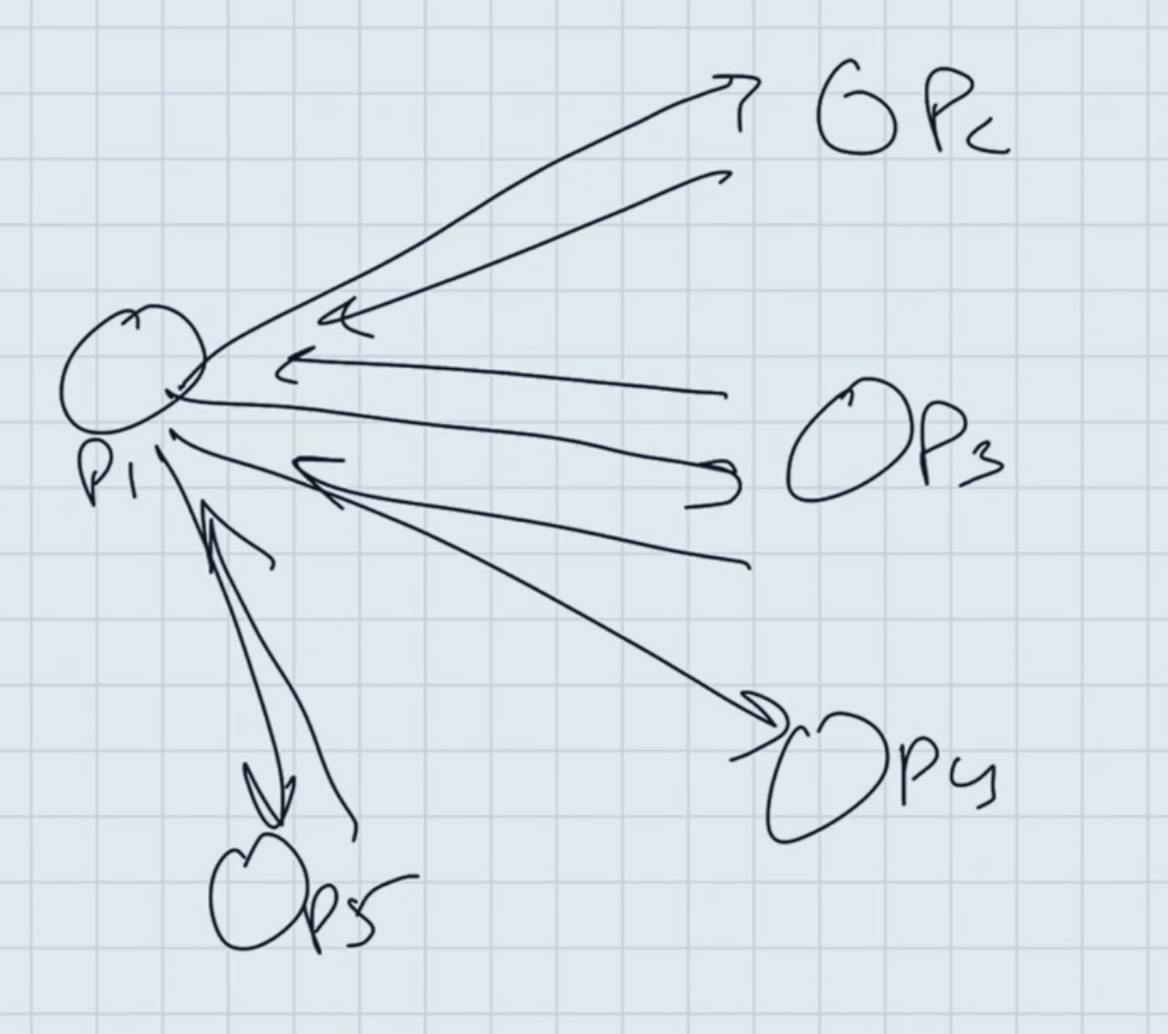
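To make the order of the signaling steps concrete, here is a toy in-memory simulation. It is only a sketch: `SignalingServer` and `negotiate` are hypothetical names, the payload strings are placeholders rather than real SDP or ICE data, and a real setup would run this over WebSocket or HTTP as described above.

```python
from collections import defaultdict, deque


class SignalingServer:
    """Relays opaque messages between named peers. It never touches media."""

    def __init__(self):
        self.inboxes = defaultdict(deque)

    def send(self, sender, recipient, kind, payload):
        self.inboxes[recipient].append(
            {"from": sender, "type": kind, "payload": payload}
        )

    def receive(self, peer):
        return self.inboxes[peer].popleft()


def negotiate(server, caller, callee):
    """Walk through offer -> answer -> candidate exchange, returning the order seen."""
    seen = []
    # 1. Caller describes its capabilities in an offer (placeholder string here).
    server.send(caller, callee, "offer", f"offer-from-{caller}")
    seen.append(server.receive(callee)["type"])
    # 2. Callee replies with an answer.
    server.send(callee, caller, "answer", f"answer-from-{callee}")
    seen.append(server.receive(caller)["type"])
    # 3. Both sides share network candidates (e.g. addresses learned via STUN).
    server.send(caller, callee, "candidate", f"address-of-{caller}")
    server.send(callee, caller, "candidate", f"address-of-{callee}")
    seen.append(server.receive(callee)["type"])
    seen.append(server.receive(caller)["type"])
    return seen


steps = negotiate(SignalingServer(), "alice", "bob")
print(steps)
```

After the last step the server is out of the picture, and media flows between the two peers.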

But this system does not scale well at all.

Video at 30 fps means 30 images per second going over the wire. Say that adds up to 1 Mbps per stream. If there are 10 other people in the call, I send 10 Mbps. If there are 20, I send 20 Mbps. Can my mini machine handle this much? Probably not.

I also need to receive 20 Mbps if there are 21 people in the call, and my mini machine most likely can't handle that much incoming media per second either.
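The arithmetic above can be sketched in a few lines. This assumes every stream costs the same 1 Mbps used in the example; the function name is made up for illustration.

```python
def mesh_bandwidth_mbps(participants: int, stream_mbps: float = 1.0) -> dict:
    """Per-client bandwidth in a P2P mesh: one stream to and from every other peer."""
    others = participants - 1
    return {
        "upload_mbps": others * stream_mbps,
        "download_mbps": others * stream_mbps,
    }


print(mesh_bandwidth_mbps(21))
```

Both numbers grow linearly with the size of the call, and every client pays them.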

This is just one problem. The other problem is that by default a full frame (a keyframe) is sent first, and after that it's usually only the 'diffs' (differences) that are sent. These diffs are encoded pieces of data that get applied on top of the earlier frame, and they have to be decoded at the destination.

This means that for every video I'm sending out (30 fps), frames are being encoded into diffs, which is a very expensive operation on the CPU. Decoding every video I receive is also very expensive.
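Here is a toy sketch of the full-frame-plus-diffs idea, assuming a frame is just a flat list of pixel values. Real video codecs are far more involved than this; the sketch only shows why a diff is smaller to send and why both ends have work to do. `encode_delta` and `decode_delta` are hypothetical names.

```python
from typing import List, Tuple

Frame = List[int]
Delta = List[Tuple[int, int]]  # (pixel index, new value)


def encode_delta(previous: Frame, current: Frame) -> Delta:
    """Sender side: keep only the pixels that changed since the previous frame."""
    return [(i, new) for i, (old, new) in enumerate(zip(previous, current)) if old != new]


def decode_delta(previous: Frame, delta: Delta) -> Frame:
    """Receiver side: apply the changes on top of the frame it already has."""
    frame = list(previous)
    for index, value in delta:
        frame[index] = value
    return frame


full_frame = [10, 10, 10, 10]
next_frame = [10, 12, 10, 10]
delta = encode_delta(full_frame, next_frame)
rebuilt = decode_delta(full_frame, delta)
print(delta, rebuilt)
```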

Solution?

SFU (selective forwarding unit)

Zoom uses this, and Google Meet uses this.

Zoom doesn't use it for its webinar structure, though, which has to support 1000+ users. You can see the same thing on Instagram: when a live has only one person, there's a 5-10 second delay. But if I invite someone to the live, suddenly there is no noticeable latency, because it switches to a WebRTC-based architecture.

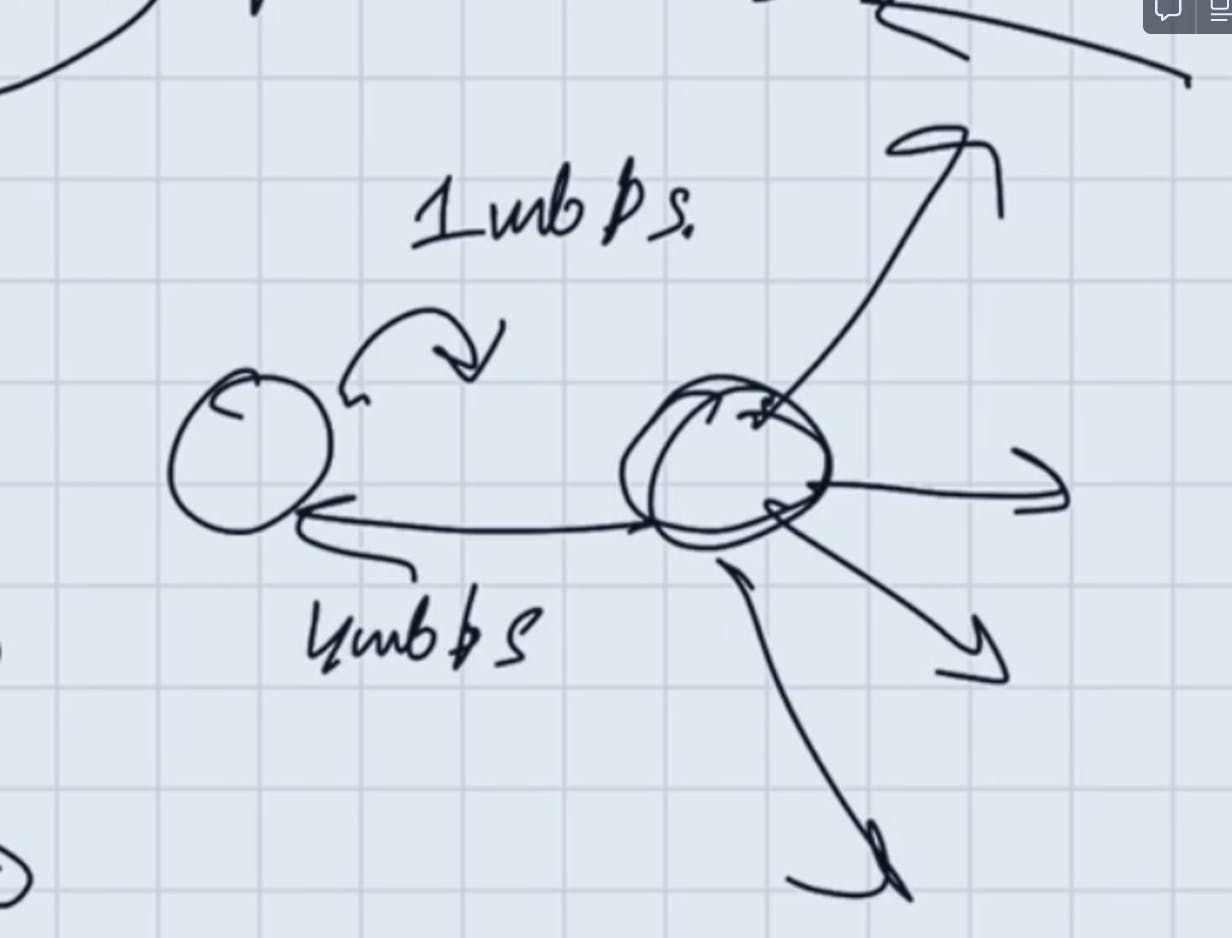

For the solution, we'd have a server in the middle which just relays the video we send out to all the users who need to receive it.

So now the net output from my system is just 1 Mbps, since the server does the relaying and I'm not sending 1 Mbps to each person in the room.

What about downloading everyone else's video?

For that, it's similar to how Zoom shows only, say, 15 users on screen even if there are 50 people in the meeting. So now you'd only have 15 Mbps of video coming in instead of roughly 50, since that's all the server in the middle sends to you.
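A rough sketch of the per-client numbers with an SFU in the middle, assuming 1 Mbps per stream and a cap on how many tiles are shown. The function and parameter names are hypothetical.

```python
def sfu_client_bandwidth_mbps(
    participants: int, visible_tiles: int, stream_mbps: float = 1.0
) -> dict:
    """Per-client bandwidth when a server relays video and only visible tiles are sent."""
    others = participants - 1
    received = min(others, visible_tiles)
    return {
        "upload_mbps": stream_mbps,  # one copy goes to the server, however big the room is
        "download_mbps": received * stream_mbps,
    }


print(sfu_client_bandwidth_mbps(participants=50, visible_tiles=15))
```

Upload stays flat at one stream, and download is capped by the layout instead of the room size.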

SFU:

Problem: P2P WebRTC doesn't scale.

A server is put in the middle, and it selectively forwards each video to whoever needs it.

Simulcast.

Simulcast basically says that I send out 2 variations of my video: a 0.5 Mbps one and a 1.5 Mbps one. My upload did go up to 2 Mbps, but if I'm just a very small tile on the receiver's screen, they don't need the bigger, better, more expensive video, and the receiver can download only the 0.5 Mbps version.

So effectively the architecture is to have a very beefy server in the middle which can handle about 1 Gbps of traffic. It takes in your video and relays it to the people who need to see it (hence selective forwarding unit), and the videos you need to see are relayed to you.

There is no distributed computing here, all users connect to the same server.

The math:

Say the server can handle 1 Gbps of traffic. First, 300 videos of 1 Mbps each hit the server, which is 300 Mbps of incoming data. What leaves the server is whatever each user asks for. Our assumption is 5 videos, all at 0.5 Mbps (the lower variation of a video), and 300 users ask for this, which is 300 * 5 * 0.5 = 750 Mbps going out. Together that is 1050 Mbps, a little above 1 Gbps.
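The same back-of-the-envelope math as a small function, so the assumptions (users, upload size, tiles per user, download size) are easy to change. The names are made up for illustration.

```python
def sfu_load_mbps(
    users: int, upload_mbps: float, videos_per_user: int, download_mbps: float
) -> tuple:
    """Return (incoming, outgoing, total) traffic on a single SFU in Mbps."""
    incoming = users * upload_mbps
    outgoing = users * videos_per_user * download_mbps
    return incoming, outgoing, incoming + outgoing


incoming, outgoing, total = sfu_load_mbps(
    users=300, upload_mbps=1.0, videos_per_user=5, download_mbps=0.5
)
print(incoming, outgoing, total)
```

Note that outgoing traffic dominates, which is why the number of tiles and the simulcast layer matter so much.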

Having a distributed SFU system is indeed possible. It's just that there are quite a few complications with video, and debugging an issue while a video stream is travelling from one server to another is painful.

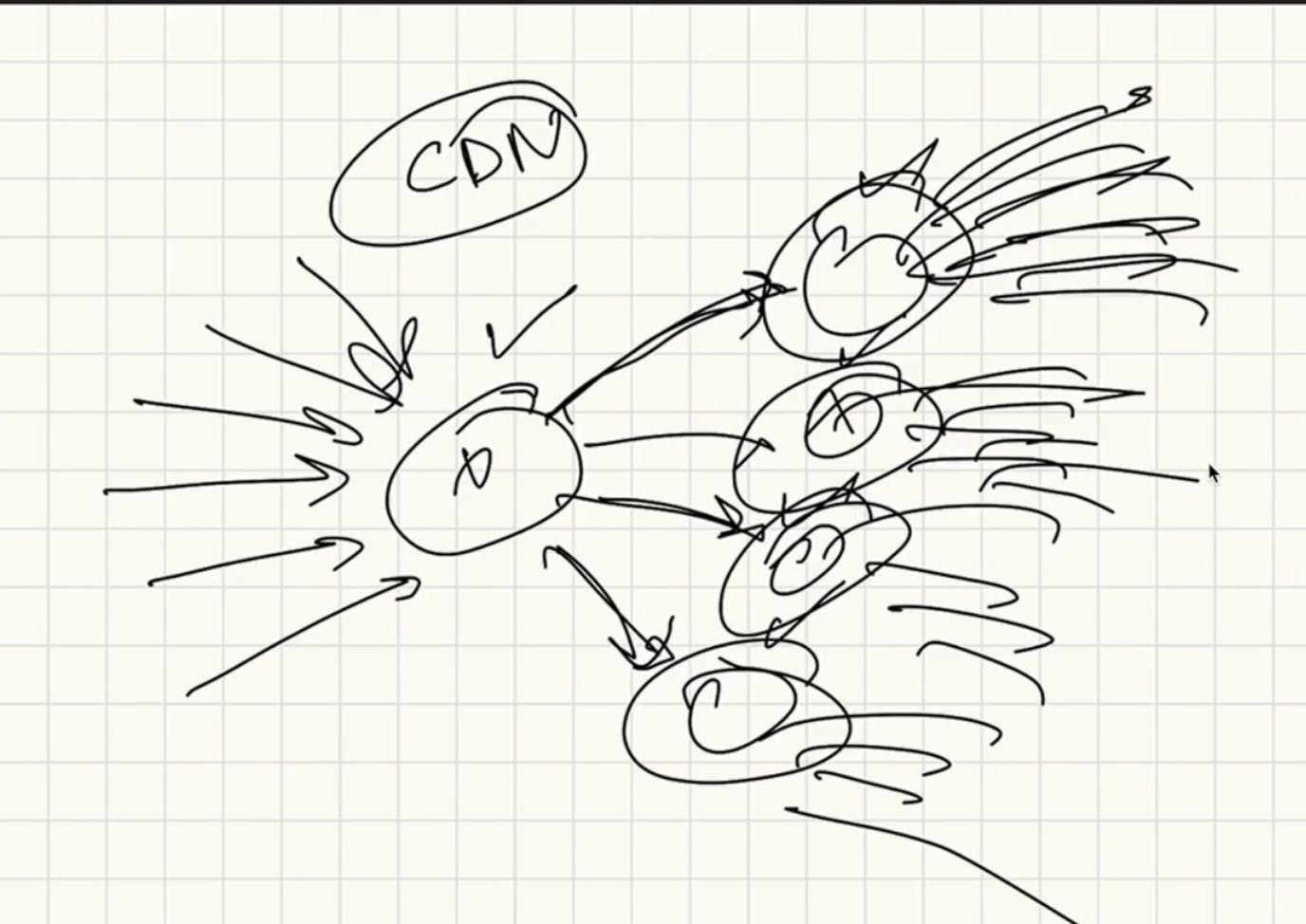

Also, for every n videos sent to the server, it needs to send out almost n^2. Hence, a better architecture could be:

The first server receives n videos, and these n videos are just relayed to x servers, which means that each of those servers now only sends to n/x people. (This could also be split on the basis of which region the requests come from, just like how CDNs work.)

The math:

INITIALLY: if 3 people send video, each of the 3 people needs to be sent the others' videos too. That's (3-1) videos for each of the 3 people, hence (3-1)*3, which is n(n-1).

Hence, if we have 300 people on the call, it would be (300-1)*300 = 89,700 outgoing streams from one server.

Hence, if we create a tree-like architecture with 4 servers below the first one, the number of people requesting video from any one server suddenly drops. Each server only needs to send to 75 people, making the equation (300-1)*75 = 22,425 streams per server.
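A quick sketch of the fan-out math, assuming everyone wants everyone else's video and that the first server relays all n videos once to each of the x servers below it. The function names and dictionary keys are hypothetical.

```python
import math


def outgoing_streams(senders: int, receivers_on_server: int) -> int:
    """Each receiver attached to a server gets every video except its own."""
    return (senders - 1) * receivers_on_server


def tree_fanout(n: int, x: int) -> dict:
    """Compare one big server against a first server feeding x relay servers."""
    receivers_per_server = math.ceil(n / x)
    return {
        "single_server": outgoing_streams(n, n),
        "per_relay_server": outgoing_streams(n, receivers_per_server),
        "first_server_to_relays": n * x,
    }


result = tree_fanout(n=300, x=4)
print(result)
```

The total work does not disappear, but no single machine has to carry all of it.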

MCU:

We solved the video problem by saying that I only want to see, say, 50 people on my screen, and the SFU doesn't send me the rest of the videos. But this doesn't exist for audio.

An MCU (multipoint control unit) gets all the audio streams and decodes them. The loudest ones are mixed, re-encoded and then sent out as a single audio stream. It is a very heavy operation on compute. This can't be done for video, obviously, because it's about 10x more compute intensive. How would you even mix video, by the way?
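Here is a minimal sketch of the mixing step, assuming the audio is already decoded into 16-bit PCM samples and that loudness is measured with RMS. Both are assumptions for illustration, and `mix_loudest` is a hypothetical name. A real MCU would also have to re-encode the result.

```python
import math
from typing import Dict, List, Tuple


def rms(samples: List[int]) -> float:
    """Root-mean-square level, used here as a simple loudness measure."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_loudest(streams: Dict[str, List[int]], top_n: int = 3) -> Tuple[List[str], List[int]]:
    """Pick the top_n loudest streams and sum them sample by sample, with clipping."""
    loudest = sorted(streams, key=lambda name: rms(streams[name]), reverse=True)[:top_n]
    if not loudest:
        return [], []
    length = min(len(streams[name]) for name in loudest)
    mixed = []
    for i in range(length):
        total = sum(streams[name][i] for name in loudest)
        mixed.append(max(-32768, min(32767, total)))  # stay inside the 16-bit range
    return loudest, mixed


decoded = {
    "a": [5000, -5000, 5000, -5000],
    "b": [10, 10, 10, 10],
    "c": [30000, 30000, -30000, -30000],
}
speakers, mixed = mix_loudest(decoded, top_n=2)
print(speakers, mixed)
```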

Best architecture:

MCU for audio

SFU for video

How does SFU know which version of video to forward?

Bandwidth feedback: every few seconds, the client requesting the video stream sends its available bandwidth, and the SFU decides what to send.

Other times, the end client says which video it wants. For example, if your Google Meet layout shows everybody as small tiles, you receive the lowest quality video.

Hence, when you pin a person, you see bad quality for a second and then suddenly good quality.
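One way the decision could look, as a sketch only. It assumes the two simulcast layers from earlier (0.5 and 1.5 Mbps), and that small tiles always get the low layer while a pinned tile gets the high layer if the reported bandwidth can afford it. `pick_layer` and its parameters are hypothetical, not how any particular SFU does it.

```python
LAYERS_MBPS = {"low": 0.5, "high": 1.5}  # assumed simulcast layers


def pick_layer(reported_bandwidth_mbps: float, pinned: bool, visible_streams: int) -> str:
    """Choose which layer of one sender's video to forward to one receiver."""
    if not pinned:
        return "low"  # small tile, no need for the expensive layer
    # Budget check: the pinned tile in high quality plus every other tile in low.
    needed = LAYERS_MBPS["high"] + (visible_streams - 1) * LAYERS_MBPS["low"]
    return "high" if reported_bandwidth_mbps >= needed else "low"


print(pick_layer(10.0, pinned=False, visible_streams=5))
print(pick_layer(10.0, pinned=True, visible_streams=5))
print(pick_layer(2.0, pinned=True, visible_streams=5))
```

The brief moment of bad quality after pinning is the gap between the layout change and the SFU switching layers.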

Why can't I use any of this for livestreaming on YT/Twitch?

It is because even though theoretically there's just one producer sending video to the SFU and the rest are consumers, even a distributed system of SFUs would find it very hard to handle something like 30k live viewers.

This is why live streaming doesn't typically use WebRTC.

Load aside, WebRTC also isn't the best fit for YT/Hotstar live streaming because it runs over UDP and favors low latency over reliable delivery. A continuous high-quality stream is hard to guarantee, and lost packets show up as jitter and dropped frames.

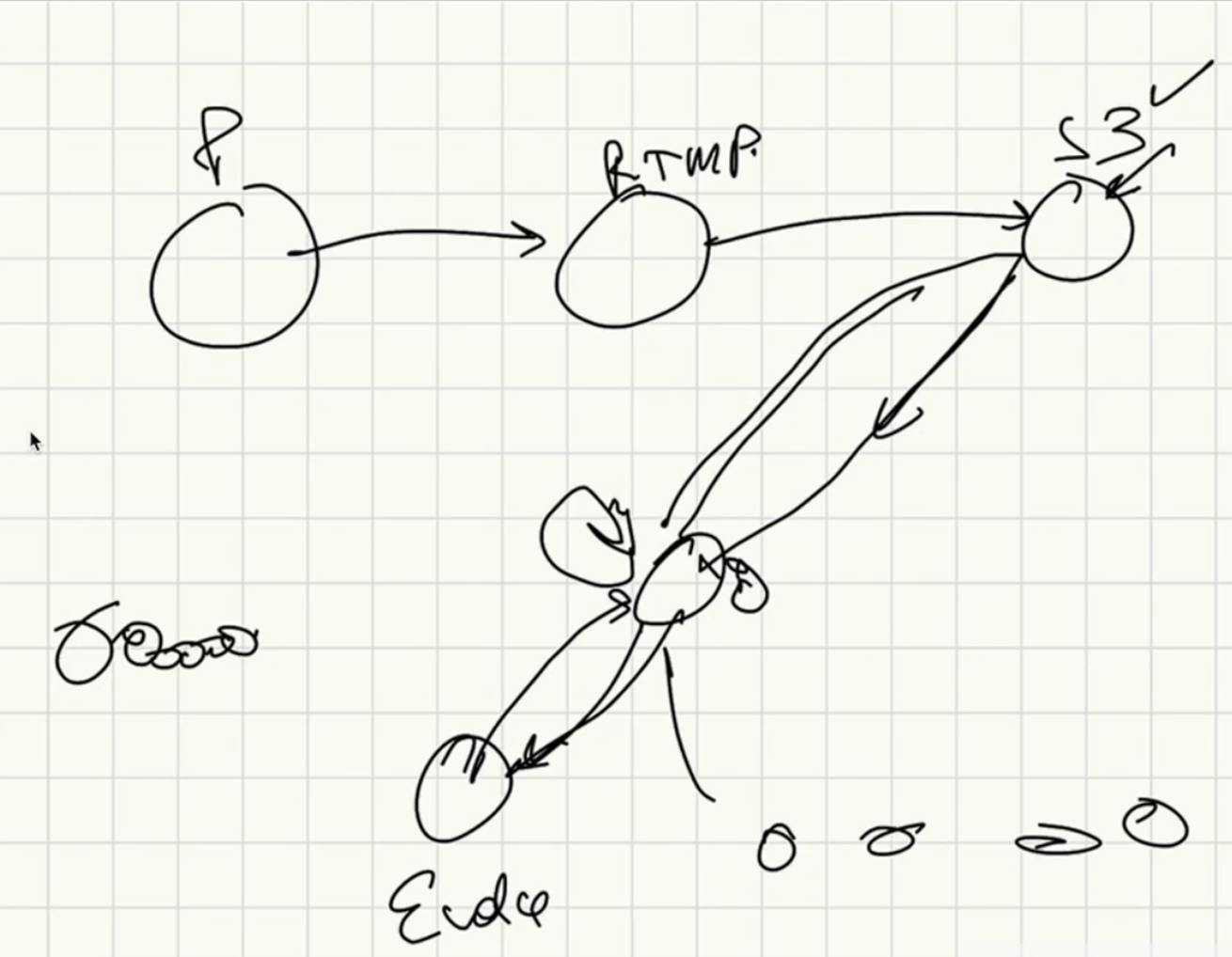

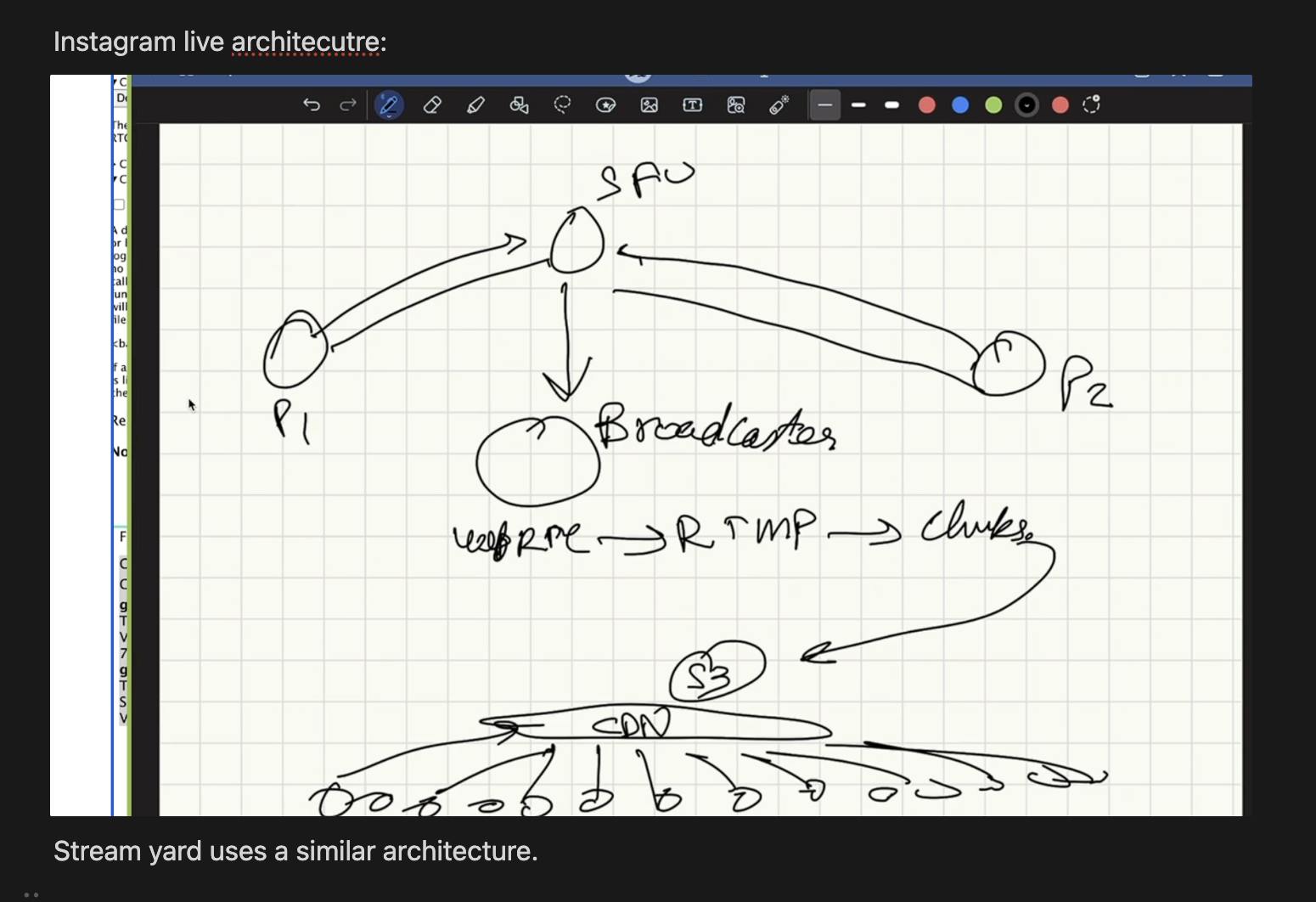

YouTube's architecture: the user sends video over RTMP to an RTMP server, which transcodes the video and uploads chunks and m3u8 files to object storage such as S3. These are then requested from CDNs. If they aren't present there, they're fetched from storage and cached in the CDN.

YouTube's Architecture:

The livestream begins with a video source, which is often a camera or a software encoder running on a computer. This source generates a continuous stream of video and audio data.

The video and audio data from the source are then encoded and sent to YouTube's servers using RTMP (Real-Time Messaging Protocol). RTMP carries media in specific encoded formats, which is why the stream is encoded before it is sent. The RTMP server is responsible for receiving this stream and forwarding it to the appropriate components for further processing.

Transcoding: Once the RTMP stream reaches YouTube's servers, it undergoes transcoding. Transcoding involves converting the incoming video stream into multiple formats and bitrates to accommodate viewers with different internet speeds and devices. This ensures a seamless viewing experience for a diverse audience.

Chunking: The transcoded video stream is divided into small chunks. This process is done to facilitate adaptive bitrate streaming, where the viewer's device can dynamically switch between different quality levels based on the available network conditions.
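To show what the chunk index files might look like, here is a sketch that builds HLS-style m3u8 playlists as plain text. The segment names, bitrates and resolutions are made-up examples, and the function names are hypothetical. The tags themselves (`#EXTM3U`, `#EXTINF`, `#EXT-X-STREAM-INF` and so on) come from the HLS playlist format.

```python
import math
from typing import List, Tuple


def media_playlist(segments: List[str], segment_seconds: float, first_sequence: int = 0) -> str:
    """Playlist for one quality level: an ordered list of chunk files."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_seconds)}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for name in segments:
        lines.append(f"#EXTINF:{segment_seconds:.3f},")
        lines.append(name)
    # No #EXT-X-ENDLIST: for a live stream the player keeps re-fetching this file.
    return "\n".join(lines) + "\n"


def master_playlist(renditions: List[Tuple[int, str, str]]) -> str:
    """Top-level playlist: one entry per quality level the player can switch between."""
    lines = ["#EXTM3U"]
    for bandwidth_bps, resolution, uri in renditions:
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth_bps},RESOLUTION={resolution}")
        lines.append(uri)
    return "\n".join(lines) + "\n"


media = media_playlist(["seg0.ts", "seg1.ts"], segment_seconds=4.0)
master = master_playlist([
    (800000, "640x360", "360p/index.m3u8"),
    (2500000, "1280x720", "720p/index.m3u8"),
])
print(master)
print(media)
```

The player reads the master playlist once, then picks whichever quality level fits its current network conditions.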

Storage: The chunks are then stored in object storage (like Google Cloud Storage or similar infrastructure). This storage allows for efficient retrieval and delivery of video content.

CDN Distribution: CDNs are used to distribute the video chunks to viewers worldwide. CDNs consist of servers strategically located in various locations to reduce latency and improve streaming performance. Viewers connect to nearby CDN servers to retrieve video chunks.
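The "fetch from storage on a miss, then serve from cache" behaviour can be sketched with a dictionary standing in for object storage. `EdgeCache` is a hypothetical name, and real CDNs add expiry, eviction and much more on top of this.

```python
class EdgeCache:
    """Toy CDN edge: serve from local cache, fall back to origin storage on a miss."""

    def __init__(self, origin: dict):
        self.origin = origin  # stands in for object storage
        self.cache = {}
        self.origin_fetches = 0

    def get(self, key: str) -> bytes:
        if key in self.cache:
            return self.cache[key]  # cache hit, origin is not touched
        data = self.origin[key]  # cache miss, go back to storage
        self.origin_fetches += 1
        self.cache[key] = data  # keep it for the next viewer
        return data


storage = {"720p/seg0.ts": b"chunk-bytes"}
edge = EdgeCache(storage)
first = edge.get("720p/seg0.ts")
second = edge.get("720p/seg0.ts")
print(edge.origin_fetches)
```

However many viewers ask this edge for the same chunk, storage is only hit once.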

Viewer's Device: The viewer's device receives the video chunks from the CDN and plays them in sequence, creating a continuous livestream.